Danger of Algorithmic Influence

“The fear of being influenced affects our sense of reality and our ability to trust our own judgments about what is true.”

Our dependence on Algorithmic Advice

We are targeted by algorithms every day. Algorithms influence who is driving us (autonomous driving), where we go (routing), whom we talk to (whose posts we see), what we read (filter bubbles of information), what medical treatment we get (Software as a Medical Device), whom we marry (automated matching on dating sites), which house we buy (creditworthiness), and even whom we vote for.

Recent research has demonstrated that Google search results alone can easily shift more than 20% of undecided voters. With half of US presidential elections won by vote margins under 7.6%, this kind of manipulation can have a serious effect.

While I am happy to let Google Maps find me the fastest route to my destination, I am not so sure about Google and other sites deciding what I read and whom I talk to. Filter bubbles and echo chambers can reinforce confirmation bias.

Are we being conned into giving up our right to self-determination?

“Social media isn’t a tool that’s just waiting to be used. It has its own goals, and it has its own means of pursuing them by using your psychology against you.”

In an age when computer algorithms dictate which content is recommended to us on YouTube, TikTok, Facebook or Instagram, our decision-making can be manipulated by flawed algorithms and bad data. These persuasive technologies exploit weaknesses in human psychology and are built to change our opinions, attitudes, or behaviors to meet their goals. Instead of us using these technologies as tools, they are using us.

Age-old persuasion and con techniques are being converted into algorithms. For example, the possibility of new comments or “likes” keeps us compulsively checking for updates in search of pleasure and reward. As everyone’s content collects more and more likes, everyone ends up spending far longer on these platforms than they intended. That is the old “reciprocity and reward” trick for influencing behavior. I bet you are familiar with the “people who bought this also bought this” tactic, which is not much different from the “herd principle” used to con or persuade. A third of your choices on Amazon are driven by recommendations. We think we are “free” to make choices, but these recommendations and tricks are nudging us away from that freedom.
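To make the “also bought” mechanism concrete, here is a minimal sketch of one common way such suggestions can be produced: counting how often pairs of items appear together in past orders and surfacing the most frequent companions. This is an illustrative assumption, not Amazon’s actual system; the order data and the function names `build_co_purchase_counts` and `also_bought` are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_co_purchase_counts(orders):
    """Count how often each pair of items appears in the same order.

    Hypothetical helper for illustration only.
    """
    co_counts = defaultdict(Counter)
    for order in orders:
        # Deduplicate items within one order so repeats don't inflate counts.
        for a, b in combinations(sorted(set(order)), 2):
            co_counts[a][b] += 1
            co_counts[b][a] += 1
    return co_counts


def also_bought(co_counts, item, k=3):
    """Return up to k items most often bought together with `item`."""
    return [other for other, _ in co_counts[item].most_common(k)]


# Hypothetical order history: each list is one customer's basket.
orders = [
    ["tent", "sleeping_bag", "lantern"],
    ["tent", "sleeping_bag"],
    ["tent", "lantern", "stove"],
    ["sleeping_bag", "pillow"],
]

counts = build_co_purchase_counts(orders)
print(also_bought(counts, "tent"))
```

Notice that nothing in this ranking asks whether the suggested item suits you; it only reflects what other people did, which is exactly the herd principle at work.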

These algorithms exploit our automatic responses, so undue influence reaches us without being filtered by our critical thinking. We are becoming compliant with algorithms in return for likes or funny videos. We are becoming computers that are fed information by other human and artificial computers: data- and algorithm-driven robots losing the power of self-determination.

Can Algorithms make us dumber?

In his book “The End of the Individual: A Philosopher’s Journey to the Land of Artificial Intelligence”, Gaspard Koenig argues that society should be cautious about the power of AI, not because it will destroy humanity (as some argue) but because it will erode our capacity for critical judgement.

A recent study (Bogert, Schecter & Watson, 2021) found that people rely more on algorithmic advice as tasks become more difficult.

Algorithms are nudging us into thinking less. People rely so heavily on Google that they treat it as an external memory source, leaving them less able to remember information they can simply search for. My wife calls it my “Google girlfriend” because I tend to follow my “girlfriend’s” driving instructions more than hers.

Algorithm transparency concerns

Algorithms are not objective; they embody the biases of their creators. They are basically recipes, and the data science that supports these recipes is like a spice rack that can improve the taste. However, algorithms are imperfect and can do harm even if the data is collected in a “fair” way. We must figure out how to make sure the data science is fair to everyone, so that people are empowered to make informed choices about how the algorithm will affect their lives.

To build more trustworthy relationships with algorithms, can we require companies to provide plain-language facts about what their algorithms do, such as “By using an algorithm, we save you money” or “By using an algorithm, we make fewer mistakes”?

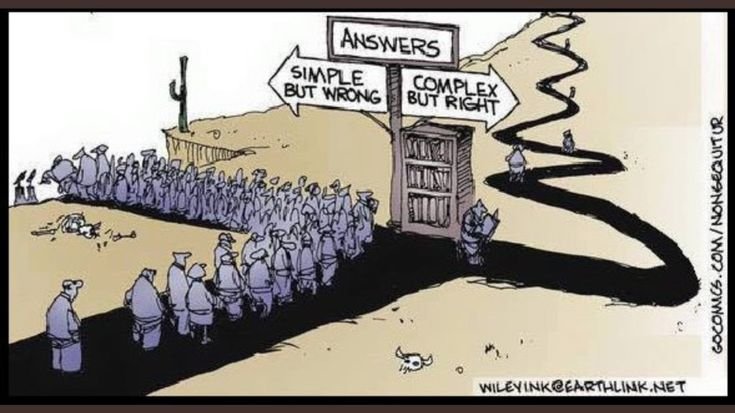

Answers are complex

Getting Back Control with Awareness

Our answer to manipulation must be broader than just “be wary of social media”.

Awareness is the key to avoiding manipulation. The more aware we are of our surroundings and the world around us, the less likely we are to be manipulated by others. In one study, over 50% of the participants shared fake news because they were not paying attention. “What is true?” is the most important question we can ever ask ourselves, and the answer lies in the solitude of our own minds, not in the company of others.

According to a study by the MIT Media Lab, people’s accuracy in detecting fake news on social media declined from 63 percent to 48 percent as the number of participants grew from three to five. The more people surround you, the less aware you are likely to be of your situation. So to live in a way that avoids manipulation, we must first be aware.

It is not enough just to be aware. If we recognize the disinformation the world throws at us every day and still succumb to it, it is because we are not practicing critical thinking: we are not making sure that our decisions are based on what is true rather than on what we perceive to be true. Without a strong habit of critical thinking, it is natural to fall for manipulation. We must learn how our minds operate and how we can better protect ourselves from manipulation. Here are some ways to practice critical thinking:

Question everything you read and see.

Ask yourself which of the ways you use technology are not aligned with your own goals.

Understand that half-truths are easier to twist for manipulation than whole truths.

Understand your own biases and find ways to identify them without being judgmental.

When you are presented with new information, do not accept it immediately. Just because a source seems credible does not mean its information is true. Analyze what you are reading critically.

Be wary of those who speak with high certainty and confidence.

Be wary of those who are vague and provide few details.

Be wary of information that is only relevant to your local area.

Be wary of information that relies on shock tactics.

Be wary of information that fits into what you already believe.

Be aware of how you are personally vulnerable to manipulation.

We must be aware that our senses, thoughts and emotions can be manipulated, and we must pay closer attention to the way we process information. Our world is changing, and we cannot just let it change around us. There is a war for our minds; we must understand how that war affects us, and recognize that its main battlefield is the truth.

Bogert, E., Schecter, A. & Watson, R.T. Humans rely more on algorithms than social influence as a task becomes more difficult. Sci Rep 11, 8028 (2021).